Microscope images that have been altered or duplicated can appear in research papers. Credit: Universal Images Group via Getty

The world’s largest science publishers are teaming up to discuss how to automatically flag altered or duplicated images in research papers. A new working group — the first formal cross-industry initiative to discuss the issue — aims to set standards for software that screens papers for problematic images during peer review.

“The ultimate goal is to have an environment that helps us, in an automated way, to identify image alterations,” says IJsbrand Jan Aalbersberg, who is head of research integrity at the Dutch publishing giant Elsevier. Aalbersberg is chairing the cross-publisher working group, set up by the standards and technology committee of the STM, a global trade association for publishers, based in Oxford, UK. The group began meeting in April and includes representatives from publishers such as Elsevier, Wiley, Springer Nature and Taylor & Francis.

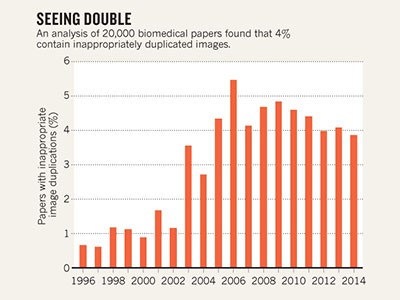

Journal editors have long been concerned about how best to spot altered and duplicated images in papers, which can be the result of honest mistakes, efforts to improve the appearance of images (such as altering contrast or colour balance), or fraud. In 2016, a manual analysis of more than 20,000 biomedical papers led by microbiologist Elisabeth Bik, now a consultant image-analyst in Sunnyvale, California, suggested that as many as 4% of them might contain problematic image duplications.

So far, however, most journals haven’t employed image-checkers to screen manuscripts, saying that it is too expensive or time-consuming, and software that can screen papers on a large scale hasn’t been available.

The new group aims to lay out the minimal requirements for software that spots problems with images, and how publishers could use the technology across hundreds of thousands — or even millions — of papers. It also wants to classify the “types and severity of image-related issues” and to “propose guidelines on what types of image alteration is allowable under what conditions”, according to the STM’s description of the group. The latter phrase refers to what different disciplines find acceptable for the presentation of images, and how modifications — if permitted — should be transparently declared by authors, Aalbersberg says. He adds that, although many publishers are individually trialling software already, cross-industry discussions on common standards could take at least a year.

Software trials

In the past few years, some publishers have been trialling image-checking software. The firms LPIXEL in Tokyo and Proofig in Rehovot, Israel, both say that publishers or institutes can upload a research paper to their desktop or cloud software and, in 1–2 minutes, automatically have its images extracted and analysed for duplications or manipulations, including instances in which parts of images have been rotated, flipped, stretched or filtered. Neither firm would publicly name clients, but both said they had paying customers among science publishers and research institutes.

Another firm that checks images using software is Resis, in Samone, Italy. And an academic group led by Daniel Acuna at Syracuse University in New York is also developing software to compare images across multiple papers, which he says is being trialled by institutions and a publisher.
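To illustrate why rotated or flipped copies can be caught automatically, here is a minimal Python sketch. It is not the method used by any of the firms or groups named above, whose algorithms are not described here. It assumes each image is a small grid of pixel values, and the names `canonical_form` and `find_duplicates` are hypothetical. The sketch reduces every image to the smallest of its eight rotated and flipped variants, so that two copies of the same panel end up with the same fingerprint.

```python
from typing import Dict, List, Tuple

# An image is modelled as a tuple of rows of integer pixel values (an assumption
# made for illustration; real tools work on full-resolution figures).
Grid = Tuple[Tuple[int, ...], ...]


def rotate90(img: Grid) -> Grid:
    """Rotate the grid 90 degrees clockwise."""
    return tuple(zip(*img[::-1]))


def flip_horizontal(img: Grid) -> Grid:
    """Mirror the grid left to right."""
    return tuple(row[::-1] for row in img)


def canonical_form(img: Grid) -> Grid:
    """Return the smallest of the 8 rotated/flipped variants as a fingerprint."""
    variants: List[Grid] = []
    current = img
    for _ in range(4):
        variants.append(current)
        variants.append(flip_horizontal(current))
        current = rotate90(current)
    return min(variants)


def find_duplicates(images: Dict[str, Grid]) -> List[Tuple[str, str]]:
    """Pair each image with the first earlier image sharing its fingerprint."""
    first_seen: Dict[Grid, str] = {}
    pairs: List[Tuple[str, str]] = []
    for name, img in images.items():
        key = canonical_form(img)
        if key in first_seen:
            pairs.append((first_seen[key], name))
        else:
            first_seen[key] = name
    return pairs


# Hypothetical panels: fig2c is fig1a rotated and then mirrored.
fig1a: Grid = ((1, 2, 3), (4, 5, 6))
fig2c: Grid = flip_horizontal(rotate90(fig1a))
fig3b: Grid = ((9, 9, 9), (0, 0, 0))

print(find_duplicates({"fig1a": fig1a, "fig2c": fig2c, "fig3b": fig3b}))
```

This toy version matches only pixel-for-pixel copies. Images that have been stretched, filtered or recompressed would need approximate matching, which this sketch does not attempt.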

Large publishers need software that can deal with a high throughput of papers, can plug directly into their peer-review processes, and, ideally, can compare a large number of images across many papers at once — a much more computationally intensive task than checking one paper. “Current technologies are not yet able to do this type of thing at a large scale,” Aalbersberg says. “Everybody realizes this is important. We are almost there but we are not there yet.”
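A back-of-the-envelope calculation shows why cross-paper comparison is so much heavier than checking a single manuscript. The sketch below assumes a naive approach in which every image is compared with every other image, and it assumes 10 images per paper and a corpus of one million papers. Both figures are illustrative assumptions, not numbers from the publishers.

```python
def pairwise_comparisons(n_images: int) -> int:
    """Number of distinct image pairs among n_images: n * (n - 1) / 2."""
    return n_images * (n_images - 1) // 2


IMAGES_PER_PAPER = 10        # assumed for illustration
PAPERS_IN_CORPUS = 1_000_000  # assumed for illustration

within_one_paper = pairwise_comparisons(IMAGES_PER_PAPER)
across_corpus = pairwise_comparisons(IMAGES_PER_PAPER * PAPERS_IN_CORPUS)

print(within_one_paper)  # 45
print(across_corpus)     # 49999995000000
```

Under these assumptions a single paper needs 45 comparisons, whereas the whole corpus needs roughly 50 trillion, which is why practical systems would need some form of indexing rather than all-pairs checks.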

‘Industrialized cheating’

Catriona Fennell, who heads publishing services at Elsevier and is in the new working group, says Elsevier has been growing more concerned by indications of what she calls “industrialized cheating” in a small minority of papers — disturbing similarities between images and text in multiple papers from different groups that are potentially being churned out to order.

This year, Bik and other image detectives flagged more than 400 papers, across a variety of journals and publishers, which they say contain so many similarities that they could have come from a single ‘paper mill’, a company that produces papers on demand.

Similarities between papers are difficult to flag during peer review, Fennell notes, not only because most peer reviewers aren’t looking for these kinds of problems, but also because many papers can be under review simultaneously — and confidentially — at different journals. Ultimately, publishers would need a shared database of images to check for re-use between papers.

In 2010, publishers agreed to deposit the text of research papers into a central service called CrossCheck, so that journals could use software to check submitted manuscripts for plagiarism. “We are going to need the same collaboration for images,” says Fennell. Once the software is ready, “I’m convinced that is going to happen,” Aalbersberg says.

Bik, who continues to find image problems in published papers, says software that could spot image problems in manuscripts during peer review would be a “wonderful” development. “Hopefully I will have less work to do,” she says.
