Building artificial connectomes

Artificial connectomes have the potential to teach behavior into a system without the need to remove behavior from established animal connectomes.

Illustration of connections (source: geralt via Pixabay)


I have emulated the C. elegans connectome (i.e., how a nervous system is wired) in robotics and shown that the connectome, paired with a simple neuron model, displays behaviors similar to those of the animal itself. Through further emulations of other animal connectomes (e.g., zebrafish), I have discovered certain rules and paradigms that govern connectomic engineering, and found that animal behavior is highly integrated into the connectomic structure. This means that, at least at this time, it is virtually impossible to remove unwanted behaviors.

With animal connectome emulation, unwanted behaviors such as defensive mechanisms will make it difficult to create robots and applications that suit our desires and needs. For example, one would not want to create a robotic puppy for a child that bites. We would much rather have the robotic puppy simply shut down and stop playing than chew on our children. This has led me to create artificial connectomes, with the hope that we can teach behavior into the system rather than try to remove behavior from established animal connectomes. I call the result connectomic artificial intelligence.

Connectomic Rules

There are certain rules of connectomic engineering that allow the emulation of sensory-to-cortical-to-motor-output behaviors. These are rules that I have observed, and many have been noted by other connectomic scientists. Some observations may seem obvious and simple, but these rules set connectomic AI apart from other artificial intelligence paradigms such as deep learning and Hierarchical Temporal Memory (HTM), and I believe connectomic AI is the best opportunity for humans to recreate our own intelligence in a computer system or network.

Here are connectomic observations and rules in no particular order. Please note that these are purely connectomic properties and do not place emphasis on the intracellular activity of individual neurons:

- The connectome is always on. Our nervous systems never shut down; they are always active. Having emulated animal connectomes, I once ran a test of an emulated connectome with some initial sensory input that started the cortical processing; and after 24 hours, I turned it off since it was obvious that the neuron-to-neuron activity would never end. This creates computing issues as well as network issues.

- The connectome is highly recursive, and in many cases, it is exponentially recursive. If we start with a cortical neuron and map out its connections to other neurons, we see that a few of those postsynaptic neurons connect back to the originating neuron. This set of neurons connects to another set of neurons, and from that next set, many connect back to the originating neuron. This continues out to more layers and sets, and at each level, a number of connections lead back to the originating neuron. From my data analysis, in many cases, the number of connections back to the originating neuron grows exponentially with each layer. I believe this is why the connectome is always on. Further work with higher-level organisms has shown that this recursive connectivity is strongest within local regions of the nervous system; it is present, but less dense, between regions of cortex.

- There are two basic temporal aspects in any nervous system:

  - The polarization timing: if a neuron, real or simulated, is left alone or not stimulated over a certain timespan, it polarizes, going to a negative (or zero) value as an internal function of time (i.e., the all-or-none response).

  - The network topology of the connectome is a function of time as well. If certain neurons fire before others, a set of behaviors will occur; but if those same neurons fire in a different sequence, we often see other behaviors. This is an external, network timing.

- There is a dampening effect displayed on muscle output. When we apply connectomes, animal or artificial, to robotics, we observe a lot of neural muscular activity where values are being sent to the motors (muscles) continuously and rapidly, but we do not see motors react in a jerky or erratic manner. We observe the motor activity as smooth and continuous. I speculate that our cerebellum plays a role in this smoothing of motor output, along with the coordination of cortex-to-motor response.

- There is a left-to-right component to all (or most) nervous systems. This is obvious but rarely employed, if ever, when creating artificial intelligence, and there is an impact on how these Left-Right edges are configured. From data analysis and further emulation of artificial connectomes, we find that connectivity between Right-Right or Left-Left neurons is about two-thirds more than Left-Right or Right-Left connectivity. These hemispheric connections are very important in perceptual direction as well as in sequencing motor output.
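The recursive-connectivity observation above can be made concrete with a small measurement. The following is only a sketch under stated assumptions: the function name `back_edges_by_layer`, the dictionary representation of the connectome, and the toy graph are all hypothetical and are not taken from my analysis code or from real connectome data. It walks outward from an originating neuron one layer at a time and counts, at each layer, how many neurons connect back to the origin.

```python
def back_edges_by_layer(adj, origin, max_depth):
    """Count, per layer, the neurons that connect back to `origin`.

    `adj` maps each neuron to the set of neurons it projects to (directed).
    Layer 1 is the set of direct postsynaptic neurons, layer 2 their
    not-yet-visited targets, and so on.
    """
    counts = []
    seen = {origin}
    frontier = [origin]
    for _ in range(max_depth):
        next_layer = []
        for pre in frontier:
            for post in adj.get(pre, ()):
                if post not in seen:
                    seen.add(post)
                    next_layer.append(post)
        if not next_layer:
            break
        # A neuron "connects back" if it has a direct edge to the origin.
        counts.append(sum(1 for n in next_layer if origin in adj.get(n, ())))
        frontier = next_layer
    return counts


# Toy directed graph, invented purely for illustration.
toy = {
    "A": {"B", "C"},
    "B": {"A", "D", "E"},
    "C": {"F"},
    "D": {"A"},
    "E": {"A"},
    "F": {"A"},
}
print(back_edges_by_layer(toy, "A", 3))
```

Plotting these per-layer counts for a real connectome is one way to check whether the feedback onto a given neuron grows with distance, as described above.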

Building Artificial Connectomes

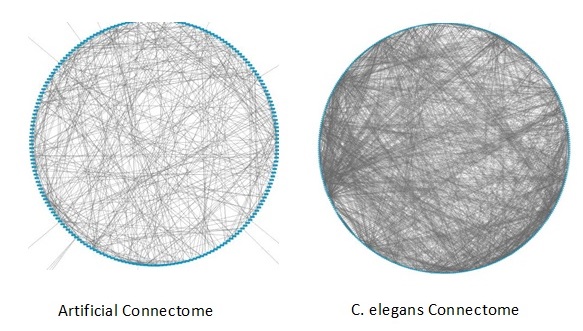

Using these observations, I wanted to develop a connectome from scratch that could learn and adapt to whatever sensory input I wanted it to consume. I did some backward data analysis on motor-neuron-to-sensory-neuron pathways using animal connectomes and realized some patterns emerging. I could see the way in which neurons were connected was much like the concept purported by the Watts-Strogatz small world algorithm. I created a program that allowed me to construct a connectome using different parameters in the Watts-Strogatz algorithm and ran a simple classification test with each model created.

Running approximately 100 trials using different configurations, I found that there is a fine line between too much connectivity and too little connectivity. Too much would result in a model that would run continuously once stimulated but would output to all motor neurons/muscles simultaneously and constantly without any discrimination; that is, all muscles were active all the time. Too little connectivity would result in the connectome taking a very long time to produce any muscle activity, if it produced any at all, and the system would cease to self-stimulate once sensory stimulation stopped. Only a very narrow range of mean degree and rewiring probability combinations produced a connectome that would self-stimulate after a short amount of sensory input and whose motor-neuron-to-muscle output was discriminatory, determined by the sensory input.
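To make the two parameters concrete, here is a minimal pure-Python sketch of the standard Watts-Strogatz construction. It is not the program I used; the function name `watts_strogatz` and the parameter values in the sweep are assumptions chosen for illustration, and the classification test that scores each model is not shown.

```python
import random


def watts_strogatz(n, k, p, seed=None):
    """Build an undirected small-world graph as {node: set_of_neighbors}.

    n: number of neurons, k: mean degree (must be even),
    p: probability of rewiring each lattice edge.
    """
    if k % 2 or not 0 < k < n:
        raise ValueError("k must be even and satisfy 0 < k < n")
    rng = random.Random(seed)
    adj = {i: set() for i in range(n)}
    # Ring lattice: each node links to its k/2 nearest neighbors on each side.
    for i in range(n):
        for j in range(1, k // 2 + 1):
            adj[i].add((i + j) % n)
            adj[(i + j) % n].add(i)
    # Rewire each lattice edge (i, i + j) with probability p, avoiding
    # self-loops and duplicate edges.
    for j in range(1, k // 2 + 1):
        for i in range(n):
            if rng.random() >= p:
                continue
            old = (i + j) % n
            choices = [c for c in range(n) if c != i and c not in adj[i]]
            if not choices:
                continue
            new = rng.choice(choices)
            adj[i].discard(old)
            adj[old].discard(i)
            adj[i].add(new)
            adj[new].add(i)
    return adj


# Hypothetical sweep over mean degree and rewiring probability.
for k in (4, 6, 8):
    for p in (0.05, 0.1, 0.3):
        graph = watts_strogatz(100, k, p, seed=1)
        edges = sum(len(nbrs) for nbrs in graph.values()) // 2
        print(f"k={k} p={p} edges={edges}")
```

Rewiring moves edges without adding or removing any, so the mean degree stays at `k` while `p` controls how many long-range shortcuts appear. Each generated graph would then be run through the classification test to see which side of the fine line it falls on.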

Theoretical Artificial Nervous Systems

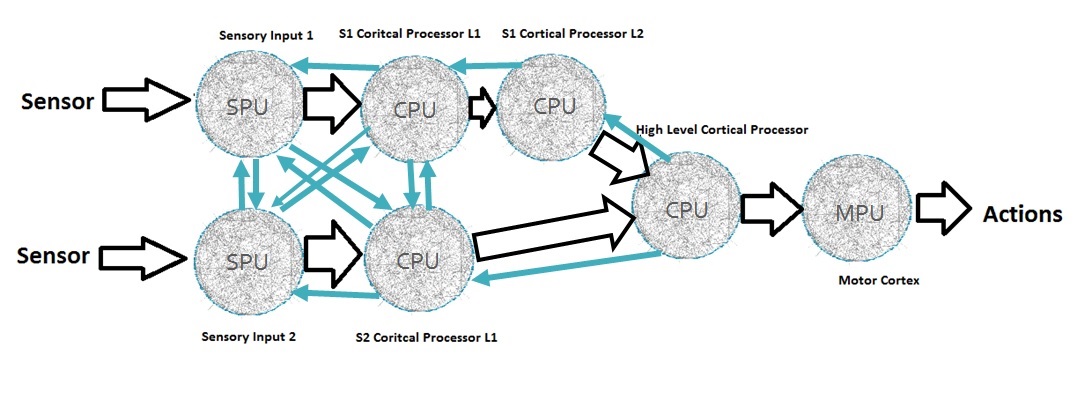

Each artificial connectome as shown in Figure 1-1 can be connected to other connectomes; and I can create multiple regions, each highly recurrent, with a large degree of interconnectivity between the regions making recurrent interconnections. Therefore, in theory, we can expand our model of a simple artificial connectome to create an artificial brain as shown in Figure 1-2.

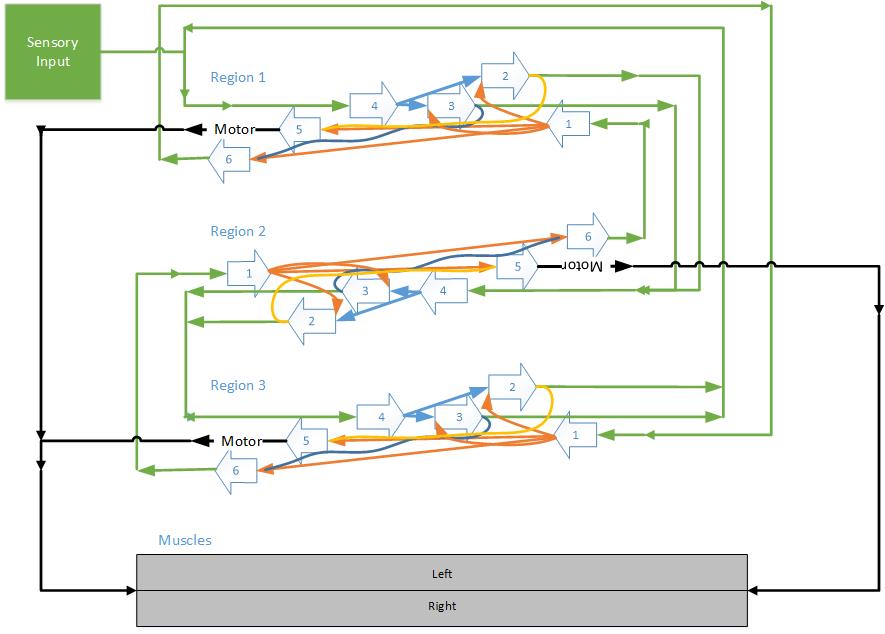

After experimenting with an artificial brain concept, I was reminded of work I did about 10 years ago utilizing the six-layer concept of a cortical column of the human cortex. Using the concept of the interconnectivity of these cortical columns, I created three cortical regions, each containing several hundred cortical columns, and interconnected the layers in a rudimentary fashion as defined by the functions of each layer. I then added sensory neurons that connected directly to the first region of cortical columns and left-right motor neurons that connected to sets of left-right muscles, as shown in Figure 1-3. The six-layer approach worked very well with simple classification tests using a 10 × 10 grid of pixels representing numeric values.

Computational Methods

In each phase of the research, I use adjacency matrices, where each row represents a connectivity (edge) vector that can be used to increase weighted values as individual neurons are fired. In addition to an adjacency matrix to contain the connections and weights between those connections, there are five other arrays where each cell of the array represents a specific neuron. Those five arrays are:

- An array to contain the accumulation of weights

- An array to contain a timestamp of the last time a neuron was stimulated

- An array containing the threshold of a particular neuron

- An array containing a “learning” accumulator

- An array containing a “growth” accumulator (note that the learning and growth accumulators trigger plasticity in the system)
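The layout above can be sketched as one small container. This is an illustrative arrangement only: the class name `Connectome`, the field names, and the default threshold of 1.0 are assumptions, and plain Python lists stand in for whatever array type an implementation would actually use.

```python
from dataclasses import dataclass, field


@dataclass
class Connectome:
    """Adjacency matrix plus five per-neuron arrays (names are hypothetical)."""

    n: int
    default_threshold: float = 1.0  # assumed value, not from the article
    weights: list = field(init=False)          # weights[pre][post], 0 = no edge
    accum: list = field(init=False)            # accumulated incoming weight
    last_stimulated: list = field(init=False)  # timestamp of last stimulation
    threshold: list = field(init=False)        # per-neuron firing threshold
    learning: list = field(init=False)         # "learning" accumulator
    growth: list = field(init=False)           # "growth" accumulator

    def __post_init__(self):
        self.weights = [[0.0] * self.n for _ in range(self.n)]
        self.accum = [0.0] * self.n
        self.last_stimulated = [0.0] * self.n
        self.threshold = [self.default_threshold] * self.n
        self.learning = [0.0] * self.n
        self.growth = [0.0] * self.n

    def connect(self, pre, post, weight=1.0):
        """Add a directed, weighted edge from neuron `pre` to neuron `post`."""
        self.weights[pre][post] = weight


net = Connectome(n=4)
net.connect(0, 1)
net.connect(1, 2, weight=2.0)
print(net.weights[1])  # row 1 is the edge vector for neuron 1
```

Keeping every per-neuron quantity in a parallel array indexed by neuron number means a row of the adjacency matrix can be added to the accumulation array directly when that neuron fires.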

As with my early experiments in emulating animal connectomes, I use an internal temporal aspect of each neuron so that if a neuron has not been stimulated in a few microseconds, all accumulators (i.e., weight, learning, and growth) are set to zero and the threshold is set to a default value. For a neuron to fire (to reach its action potential), the weight accumulation must exceed the threshold. Any time a neuron fires, its weight accumulation is set to zero (I have also experimented with negative numbers, but the outcome is pretty much the same). Each time a neuron fires, its weight vector (its row of the adjacency matrix) is added to the accumulation array, so the weights accumulate in the neurons it connects to. A timer function runs constantly to check where accumulation is greater than the threshold and fires each neuron that meets this criterion.

To simulate synaptic fatigue, each time a neuron fires, the threshold for that neuron is incremented by a small amount, so that a constantly activated neuron finds its threshold a little harder to exceed each time. As mentioned, the threshold is reset to a default value if a neuron does not fire within a set time frame.
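One timer tick of this firing, fatigue, and reset cycle might look like the sketch below. The function name `step`, the fatigue increment, the timeout, and the use of integer ticks as timestamps are assumptions for illustration. The sketch also assumes the reset is keyed to the last time a neuron was stimulated, and it leaves out the learning and growth accumulators.

```python
def step(weights, accum, last_stim, threshold, now,
         default_threshold=1.0, fatigue=0.1, timeout=5):
    """Advance the network by one timer tick; return the neurons that fired."""
    n = len(accum)
    # Neurons left unstimulated for longer than `timeout` are reset.
    for i in range(n):
        if now - last_stim[i] > timeout:
            accum[i] = 0.0
            threshold[i] = default_threshold
    # A neuron fires when its accumulated weight exceeds its threshold.
    fired = [i for i in range(n) if accum[i] > threshold[i]]
    for pre in fired:
        accum[pre] = 0.0           # firing clears the accumulator
        threshold[pre] += fatigue  # synaptic fatigue: next firing is harder
    # Add each fired neuron's row of the adjacency matrix to the accumulators.
    for pre in fired:
        for post, w in enumerate(weights[pre]):
            if w:
                accum[post] += w
                last_stim[post] = now
    return fired


# Three-neuron chain 0 -> 1 -> 2 with weight 2.0 on each edge.
weights = [[0.0, 2.0, 0.0], [0.0, 0.0, 2.0], [0.0, 0.0, 0.0]]
accum = [2.0, 0.0, 0.0]  # neuron 0 has just received sensory input
last_stim = [0, 0, 0]
threshold = [1.0, 1.0, 1.0]
for tick in (1, 2, 3, 4):
    print(tick, step(weights, accum, last_stim, threshold, now=tick))
```

In this toy chain the activity passes down the line one neuron per tick and then dies out, because there are no recurrent connections to keep it going.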

Plasticity is formed in two ways: learning and growth. A “learning” array is incremented when a neuron fires; and if that learning accumulation exceeds a learning threshold, weight is increased on the adjacency matrix. For example, where a connection from neuron A to neuron B has a weight of one (1), it could be increased by one so that it is now two (2). This simulates connectivity strength. A “growth” array is incremented when the learning accumulation exceeds the learning threshold. When the growth accumulation exceeds a growth threshold, I add a synaptic connection to a nearby neuron, which simulates synaptic growth. As mentioned, if a specified time has passed since a neuron was stimulated, both the learning and growth accumulators are set to zero; that is to say, all accumulation is a function of time.
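The two plasticity rules can be sketched as a single function called whenever a neuron fires. Several details here are assumptions made only so the example is runnable: the function name `on_fire`, the threshold values, strengthening every existing outgoing connection of the firing neuron, clearing an accumulator once it triggers, and treating "nearby" as the closest neuron index on a ring. The time-based reset of both accumulators is omitted.

```python
def on_fire(weights, learning, growth, pre,
            learning_threshold=3, growth_threshold=2):
    """Update plasticity state for neuron `pre`, which has just fired."""
    n = len(weights)
    learning[pre] += 1
    if learning[pre] <= learning_threshold:
        return
    # Learning: strengthen existing outgoing connections by one (assumed scope).
    for post in range(n):
        if weights[pre][post] > 0:
            weights[pre][post] += 1
    learning[pre] = 0  # assumption: the accumulator clears after triggering
    growth[pre] += 1
    if growth[pre] <= growth_threshold:
        return
    # Growth: add a synapse to the nearest unconnected neuron (assumed ring).
    for dist in range(1, n):
        for post in ((pre + dist) % n, (pre - dist) % n):
            if post != pre and weights[pre][post] == 0:
                weights[pre][post] = 1
                growth[pre] = 0  # assumption: clears after a synapse is added
                return


# Neuron 0 starts with a single connection to neuron 1.
weights = [[0, 1, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]
learning = [0, 0, 0, 0]
growth = [0, 0, 0, 0]
for _ in range(12):
    on_fire(weights, learning, growth, pre=0)
print(weights[0])
```

With these assumed thresholds, repeated firing first strengthens the existing connection and eventually adds a new one, which is the qualitative behavior described above.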

Conclusion

This technology can be used for unsupervised learning in domain-agnostic environments. The real goal is to process real-time, streaming information in a way that allows the AI to organize, optimize, and make predictions, then test outcomes against these hypotheses for its own reinforcement. There is still much research to do; but having a framework, and having successfully completed simple tests, my goal is to apply this technology to develop reactions to more complex input from both an application perspective and a robotic perspective. On the application side, there are many mundane tasks that can be automated with intelligence and with a system that can learn while unsupervised. Good examples are functions that invoke business continuity and active auditing. On the robot side, I am working to create an open system with many sensors that can navigate through hostile environments without human intervention, where the robot can operate on its own to carry out primary objectives while avoiding harm. This technology can be used for many mundane robotic tasks, from weed removal and picking fruit and vegetables to maintaining your lawn. Imagine a robot lawn mower or trimmer that senses a sprinkler head and knows not to run over it, not because you have programmed it to avoid sprinkler heads, but because the robot can sense there is an unidentified object that it has no reason or purpose to run over.

For us to advance AI beyond the current status quo, we need to explore new ways to give machines intelligence beyond programming rules and beyond current artificial neural networks. I believe connectomic AI holds a key to making this happen.